Hi, I have recently been experimenting with the AMR simulator and have found a few features that I think would massively improve usability.

First is the ability to export the log. Currently I have been using a third-party app to rip the data from the tables so I can perform my own analysis, e.g. looking at damage spikes and consistent DPS by plotting a rolling average like in Warcraft Logs. The ability to download the data as a CSV (or another file format) would be greatly appreciated.

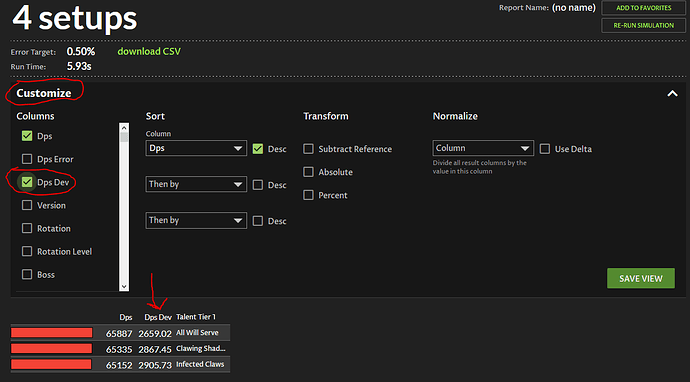
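As an illustration of the kind of analysis an export would enable, here is a minimal sketch of a rolling-average DPS calculation. It assumes a hypothetical CSV export with one row per damage event and columns named `time` (seconds into the fight) and `damage`; the simulator's real export format, if one is added, may well differ, and `load_events` / `rolling_dps` are illustrative names only.

```python
import csv
import io
from collections import deque


def load_events(csv_text):
    """Parse (time, damage) pairs from CSV text with hypothetical `time` and `damage` columns."""
    reader = csv.DictReader(io.StringIO(csv_text))
    events = [(float(row["time"]), float(row["damage"])) for row in reader]
    return sorted(events)  # the rolling window below expects events in time order


def rolling_dps(events, window=5.0):
    """For each event, return (time, damage dealt in the trailing `window` seconds / window)."""
    recent = deque()
    total = 0.0
    result = []
    for t, dmg in events:
        recent.append((t, dmg))
        total += dmg
        # Drop events outside the interval (t - window, t].
        while recent and recent[0][0] <= t - window:
            total -= recent.popleft()[1]
        result.append((t, total / window))
    return result


sample = "time,damage\n0,100\n1,200\n6,300\n"
for t, dps in rolling_dps(load_events(sample)):
    print(f"{t:5.1f}s  {dps:8.1f} DPS")
```

Dividing by the full window length means the first few seconds of a fight read low; a plotting tool could simply skip the first `window` seconds.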

Second, a thought concerning the results displayed when multiple setups are tested: although the average DPS is shown, the standard deviation is not, and this can lead to misunderstanding the relative rankings of the setups, e.g. marginal average gains that come with huge swings in variance (mainly seen with Visions and specs like Outlaw).

One solution would be to simply display the standard deviation in the table. Another idea is to treat each setup's results as normally distributed (or use another distribution if one is more appropriate) and display the chance that each setup is the top-performing one.
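A rough sketch of the "chance to be top" idea for any number of setups, assuming each setup's DPS is an independent normal distribution described only by its mean and standard deviation. It uses Monte Carlo sampling rather than an exact formula, and `prob_top` is a hypothetical helper name, not anything in the simulator.

```python
import random


def prob_top(setups, trials=100_000, seed=0):
    """Estimate P(setup i has the highest DPS) for each setup.

    `setups` is a list of (mean, std_dev) pairs; each setup is modelled as an
    independent normal distribution, which is an assumption, not a given.
    """
    rng = random.Random(seed)
    wins = [0] * len(setups)
    for _ in range(trials):
        draws = [rng.gauss(mean, sd) for mean, sd in setups]
        wins[draws.index(max(draws))] += 1  # credit whichever setup drew highest
    return [w / trials for w in wins]


print(prob_top([(65_303, 4919), (61_480, 3534)]))
```

For two setups the estimates should land close to the 0.736 / 0.264 split from the worked example; with more setups the probabilities still sum to 1.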

Mathematical explanation here

Take two setups as an example (not the greatest example, but it illustrates the point):

| | Mean DPS | Std dev |
|---|---|---|
| Setup 1 | 65,303 | 4,919 |
| Setup 2 | 61,480 | 3,534 |

At first glance, why would you ever pick setup 2? It is nearly 4k DPS lower. But the two strategies (in this case Stellar Flare vs Starlord, using the best setup for me with BiB) have noticeably different standard deviations.

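So, to calculate which is better, we want P(Setup 1 > Setup 2). Let $D = X_1 - X_2$ be the difference between the two setups' DPS. Assuming the two setups' results are independent, $D$ is normally distributed with

$$\mu_D = 65{,}303 - 61{,}480 = 3{,}823$$

$$\sigma_D = \sqrt{4919^2 + 3534^2} \approx 6{,}057$$

Using the method linked above, with $Z$ a standard normal variable:

$$P(D > 0) = 1 - P\left(Z < \frac{-3823}{6057}\right) \approx 1 - P(Z < -0.6312) \approx 1 - 0.264 = 0.736$$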
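The same two-setup calculation can be checked in a few lines of Python, under the same assumption of independent normal distributions. `prob_first_beats_second` is a hypothetical helper name; `statistics.NormalDist` is part of the standard library from Python 3.8.

```python
from math import sqrt
from statistics import NormalDist


def prob_first_beats_second(mean1, sd1, mean2, sd2):
    """P(setup 1 out-damages setup 2), assuming independent normal results."""
    mu_d = mean1 - mean2              # mean of the difference D = X1 - X2
    sd_d = sqrt(sd1**2 + sd2**2)      # variances add for independent variables
    return 1 - NormalDist().cdf(-mu_d / sd_d)


p = prob_first_beats_second(65_303, 4919, 61_480, 3534)
print(round(p, 3))  # 0.736
```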

So although the first setup does more DPS about 74% of the time, the second option is not as unviable as it looks at first glance: it comes out ahead roughly 26% of the time.

(With some additional calculation you find that if setup 2 had a higher standard deviation, its chance of beating setup 1 would be even higher, although with a lower mean that chance approaches but never exceeds 50%.)
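A short sketch of that additional calculation, again assuming independent normal results: setup 2's chance of winning is $\Phi\left((\mu_2 - \mu_1)/\sqrt{\sigma_1^2 + \sigma_2^2}\right)$, which grows as $\sigma_2$ grows but tends to $\Phi(0) = 0.5$ while $\mu_2 < \mu_1$. The `prob_second_wins` name and the swept standard deviations are purely illustrative.

```python
from math import sqrt
from statistics import NormalDist


def prob_second_wins(mean1, sd1, mean2, sd2):
    """P(setup 2 out-damages setup 1), assuming independent normal results."""
    sd_diff = sqrt(sd1**2 + sd2**2)
    return NormalDist().cdf((mean2 - mean1) / sd_diff)


# Widen setup 2's spread while keeping its lower mean fixed.
for sd2 in (3534, 10_000, 50_000, 1_000_000):
    p = prob_second_wins(65_303, 4919, 61_480, sd2)
    print(f"sd2 = {sd2:>9,}: P(setup 2 wins) = {p:.3f}")
```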

These are just a few thoughts I had, and I have no idea how difficult they would be to implement. I think they would be especially useful when people are modifying rotations, as they give what I feel is a better understanding of the impact of a change.

Thanks,

Deathingames

EDIT: If this is implemented, it can be hard to see the differences when many setups are compared, so the probabilities could be weighted by the number of setups being compared. For the previous example:

Setup 1 would get a score of 0.736 × 2 = 1.472

Setup 2 would get 0.264 × 2 = 0.528

So a score of 1 always corresponds to an average setup, since the chances of being top sum to 1 and the scores therefore always average to 1.
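The weighting itself is a one-liner; this sketch assumes the input is each setup's chance of being top (so the values sum to 1), and `normalized_scores` is a hypothetical name.

```python
def normalized_scores(top_probs):
    """Scale each setup's chance of being top by the number of setups.

    Because the chances sum to 1, the scores average to 1, so 1 means "average".
    """
    n = len(top_probs)
    return [n * p for p in top_probs]


print(normalized_scores([0.736, 0.264]))  # [1.472, 0.528]
```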